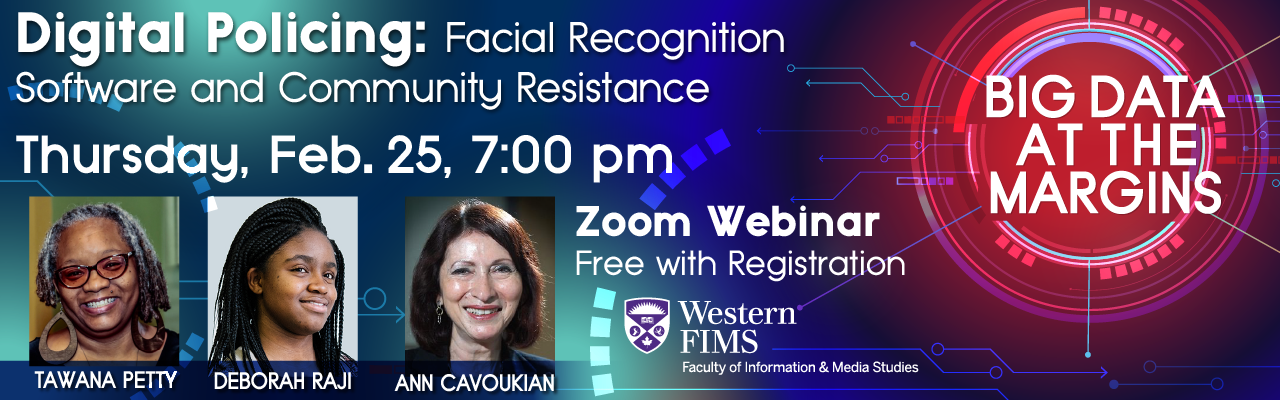

Digital Policing: Facial Recognition Software and Community Resistance

Featuring: Tawana Petty, Deborah Raji, and Ann Cavoukian.

Make sure to register for our next free Zoom Webinar, open to everyone at Western and beyond: click here.

How can we come together to push back on the deployment of facial recognition technologies by police forces, schools, and other civic institutions? What are the best strategies for the successful abolition of these and other carceral technologies?

The second in our Big Data at the Margins series examines how the digitization and datafication of the criminal justice system have intersected with the development and deployment of AI-driven technologies like facial recognition and predictive policing. Police forces in Canada have been eager to use facial recognition to identify and arrest people, raising major concerns surrounding data privacy and the civil rights of the accused. Civil society activists ranging from the Water Protectors of Standing Rock to the Black Lives Matter activists of this past summer’s uprisings against police brutality and the carceral state have been similarly targeted for FRT surveillance by law enforcement authorities. And algorithms used in the US criminal justice system to predict recidivism have drawn international condemnation for their potential for bias against Black defendants. This intensification of policing via digital tools has been met with stiff resistance from communities across North America, who are calling not only for many of these technologies to be banned, but also for the broader dismantling of the irredeemably racist elements of the carceral state.

Our internationally recognized panelists will address the impacts of facial recognition technologies on individual privacy, the perpetuation of algorithmic bias against already disadvantaged groups, and the struggle for community data justice. The award-winning work of Deborah Raji, incoming Mozilla Fellow and collaborator with the Algorithmic Justice League, has highlighted the racial bias intrinsic to computer vision systems. Ann Cavoukian, former Information & Privacy Commissioner of Ontario and one of the world’s leading privacy experts, has pioneered Privacy by Design, a framework to ensure human privacy is protected via digital technologies. And Tawana “Honeycomb” Petty, co-founder of Our Data Bodies and former director of the Data Justice Program at Detroit Community Technology Project, is one of North America’s foremost community advocates and social justice activists pushing both for the abolition of carceral technologies and for grassroots community-led digital life.